To Protect Ourselves From Bioweapons, We May Have to Reinvent Science Itself

In June 2012, a team of researchers from the University of Wisconsin published a paper in the journal Nature about airborne transmission of H5N1 influenza, or bird flu, in ferrets. The article changed the way the United States and nations around the world approached manmade biological threats.

This was not the researchers’ intent.

The team had altered the virus’s amino acid profile, allowing it to reproduce in mammal lungs, which are a bit colder than bird lungs. That small change allowed the virus to spread between ferrets via coughing and sneezing, and it showed how H5N1 could become airborne in humans.

The U.S. government initially supported the work through grants, but members of Congress, among other critics around the world, responded to the publication of the research with alarm and condemnation. A New York Times editorial described the experiment, along with similar research by a Dutch team that was eventually published in the journal Science, as "An Engineered Doomsday." So the researchers agreed to a voluntary moratorium on their research. In October 2014, the White House Office of Science and Technology Policy announced that it would pause funding for research into how to make diseases more lethal or more transmissible, so-called “gain-of-function” studies, and asked anyone already doing such research on deadly diseases to voluntarily stop.

The White House moratorium was not a direct response to the original University of Wisconsin study so much as it was an answer to a series of embarrassing incidents that included improperly handling contaminated wastes, accidentally shipping dangerous pathogens, and “inventory holdovers” at government labs. Nevertheless, the Wisconsin study features prominently in the current discussion within government and labs around the world about the costs and benefits of certain types of scientific inquiry.

Why do research on how to make the world’s most dangerous viruses and bugs more lethal? The answer varies tremendously depending on who is asking and for what purpose the research is taking place. While experts differ in their views on how and where such work should be done, there is wide agreement that the barriers to entry for new biological creations, including ones that could kill millions of people, are decreasing.

Today, there is little international enforcement of limitations on bioweapons. For chemical materials like sarin gas, the Chemical Weapons Convention provides a treaty-based legal framework for stopping proliferation, and a watchdog group, the Organization for the Prohibition of Chemical Weapons, to investigate potential violations. No similar watchdog exists for biological weapons, even though all but a few countries have ratified the Biological and Toxin Weapons Convention. Among the holdouts is Syria, where President Bashar al-Assad’s regime is suspected of harboring strains of smallpox for research.

“In light of this, it is indeed Assad's biological weapon complex that poses a far greater threat than his chemical-weapons complex,” wrote bioweapons expert Jill Bellamy van Aalst and GlobalStrat managing director Olivier Guitta in The National Interest in 2013. Some of the biological research that the Assad regime was conducting was based in Homs, Bellamy van Aalst and Guitta wrote, putting smallpox samples or possibly rudimentary bioweapons within reach of the Islamic State, or ISIS.

Dangerous Research And Unknown Unknowns

The genetic engineering of deadly pathogens is not the sort of thing that a terrorist or would-be supervillain could easily attempt in a kitchen. But the quickening pace of genetics research has plenty of scientists worried. Suzanne Fry, director of the Strategic Futures Group at the Office of the Director of National Intelligence, told a group at last month’s SXSW technology conference in Austin, Texas, that synthetic biology was a big concern among many of the technologists she had been interviewing recently. “Some very, very prominent scientists have said that that worries them very much,” she said.

George Church, a Harvard Medical School researcher widely considered a father of modern genetic research, offered a somber assessment of the future of genetically engineered bioterror. “How would we have calculated the odds of the events on 9-11-2001 on 9-10-2001?” he said via email, “or the Aum Shinrikyo [Tokyo subway attacks]? Hopefully, before anything happens, the good guys will get better at new pathogen detection and immunity soon — both to prevent this scenario and naturally emerging infectious diseases.”

Members of the Department of Defense's Ebola Military Medical Support Team put on protective gear during training at San Antonio Military Medical Center in San Antonio.

There’s a difference between building better detection kits and figuring out how to engineer new and more lethal versions of familiar viruses. But these areas of exploration are not always distinct. The best and most reliable Ebola detection devices rely on the virus’s unique genetic signature, giving a level of certainty unmatched by fever scanners and other symptom trackers. Because those devices look for a known signature, a new Ebola-like pathogen with a different genetic signature might go undetected by even the most up-to-date devices and methods.

The H5N1 case shows how a once-difficult challenge becomes far easier to repeat once results are published, because they spread so quickly in the age of digital interconnection, according to Gaymon Bennett, an Arizona State University religious studies professor and biotechnology expert who has written extensively on synthetic biology.

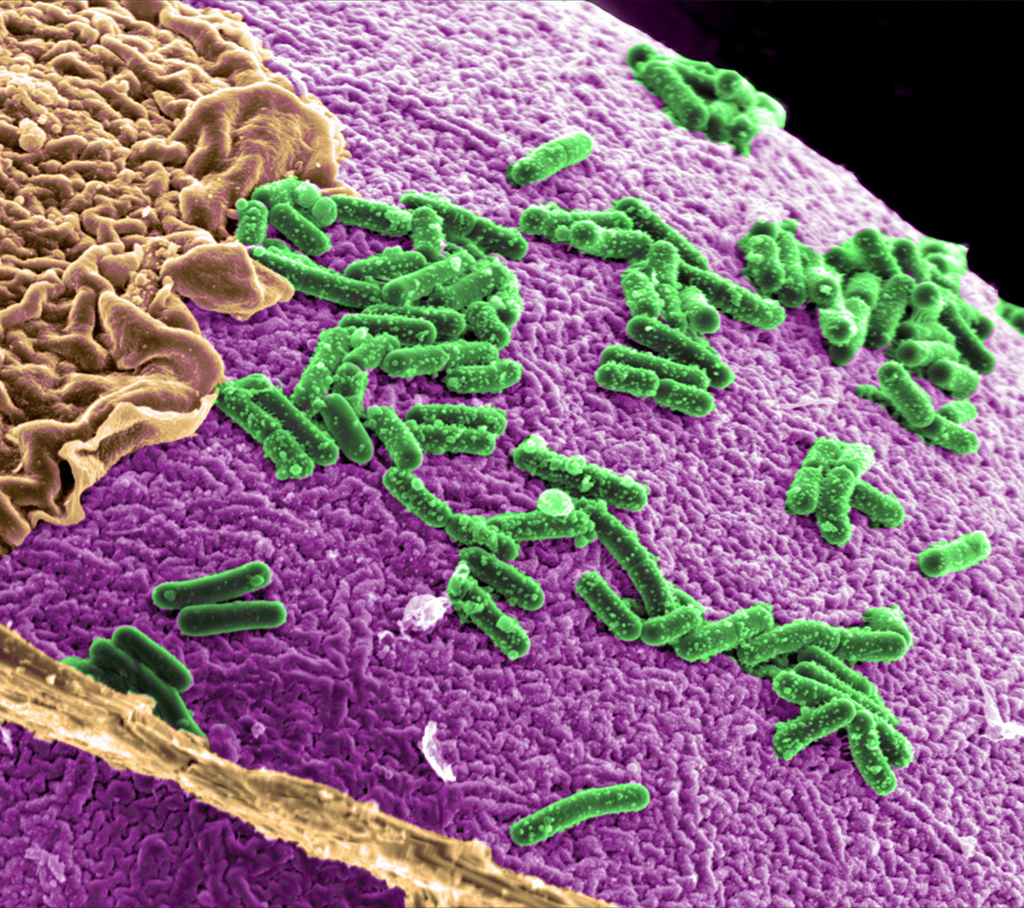

An image of intestinal flora.

“It took specialized facilities and millions of dollars” for University of Wisconsin researchers to figure out how to create the amino acid sequence that would allow the virus to reproduce in mammal lungs, he said. “But once you publish the sequences … once they’ve done that work, it would take a competent physician a few thousand dollars and a few weeks to reproduce the result.”

Richard Danzig, a former secretary of the Navy, says that he understands the impulse behind moratoriums. He points to the years following the Manhattan Project, when research was classified and researchers monitored, as an example of a research control regime that worked. But today, the Internet makes implementing a similar control regime much harder, Danzig said at a 2013 Atlantic Council event. “Our capabilities to control information seem inadequate, so that the distortive effects of those kinds of controls tend to outweigh, I think, the positive effects,” he said. “It turns out that the information leaks in a hundred ways or is independently recreated in a global world outside the reach of your jurisdiction.”

For the military, the reasons to conduct gain-of-function research may outweigh the reasons to suppress it. Military leaders are fond of saying that they never want to find themselves in a fair fight: they always want the advantage. In the context of biological threats, that means understanding how Ebola could be weaponized, even though international agreements such as the 1925 Geneva Protocol prohibit the use of such weapons in war. Of course, "understanding" is different from actually developing a weaponized Ebola strain, which is illegal under the Biological Weapons Convention.

Last year, the Pentagon’s Defense Advanced Research Projects Agency, or DARPA, opened a biological technologies office to explore issues such as synthetic biology and epidemiology. Its work includes everything from creating new high-energy density materials from organic matter to exploring how diseases spread and become more dangerous. DARPA Director Arati Prabhakar recently said she created the office because of the rising potency of biotechnology powered by information technology. “When I say I see the seeds of technological surprise in that area, that’s exactly what I mean. Hugely powerful technologies are bubbling out of that research.”

Prabhakar said the agency constantly considers the political and scientific controversies surrounding biotechnology research, but that it isn’t in the interests of DARPA or the nation to hold back because of controversy. “We’re responsible to be in those areas but we’re also responsible for raising those issues and convening that dialogue. What we’ve done that’s been very helpful is tap experts in different areas. We have experts in neuroethics, other experts who are very smart in synthetic biology and law, policy and societal issues … but DARPA is not going to make up answers to these societal choices. That’s a much broader undertaking.”

The cheapest and most effective way to do this kind of research is to open it up to more scientists and to broader publication. If the U.S. doesn’t, someone else might.

“It’s a huge dilemma for our military,” said Gary Marchant, professor of emerging technologies, law, and ethics at Arizona State University, speaking at the New America Foundation’s Future of War conference in February. (Defense One is a media partner of the foundation’s.) “We know that there’s going to be people out there who won’t follow those same self-imposed restrictions. To understand what those threats are and be able to counter them do we need to make the monster ourselves? And when we do this stuff, do we publish it?”

The problem of how to control bioterror information in the Information Age is complex and important enough to cause a shift in science itself, according to some members of the research and university community. At the very least, it’s enough to prompt a change in the way in which some scientific research happens.

One of the basic principles of science is the idea that all research conducted in accordance with sound scientific methods, and that does not directly harm any humans or put them at risk, adds value to civilization. Of course, a terrorist or nation-state could use the product of such research to harm innocents. But under that principle, the potential for misuse is not a reason to forgo the research in the first place.

The Wisconsin study has caused some to question the infallibility of that centuries-old approach.

Predicting What Science Will Do Before It Does It

Instead of trying to figure out how to contain potentially dangerous bioterrorism discoveries, one alternative gaining support is to predict, or at least to forecast or model, the outcomes of research before it occurs.

“We’ve been working on a tool in our consortium of science policy and outcomes where we’ve been thinking through: How would you think about the implications of a technology at the time that it became scientifically feasible … not doable but feasible … how would you think through all of the implications and then guide the evolution of the technology so that you then get these … unalterable outcomes that could affect the entire species,” ASU president Michael Crow said at the Future of War conference.

He envisions that this tool, called Real-Time Technology Assessment, or RTTA, would broaden the range of inputs that universities, labs, research communities and individuals weigh when deciding what research to pursue. Some of the inputs might be social, some technical, some political and so on. Importantly, says Crow, the effect of the assessment would not necessarily be to limit or torpedo any particular effort or give sociologists veto power over what sort of topics chemists pursue. Rather, the intent is to create the fullest picture possible of the total effects of the research. “Imagine that we avoid conflict in the future by rethinking how we do science now. Not taking away the fundamental discovery aspect.”

David Guston, an ASU political science professor, developed the RTTA concept with Daniel Sarewitz in a seminal paper the two co-wrote. The authors describe the tool as a system that uses opinion polling, focus groups, and futurist methodologies like scenario planning and socio-technical mapping to explore how different people may respond to the scientific or technological innovation under consideration.

RTTA is “about using a fairly traditional set of social science research tools to help create more thoughtful consideration on the part of scientists and engineers of the choices they make in the laboratory,” Guston said.

If the process works correctly, the researchers, and everyone who may be harmed by or benefit from the research, share a clear understanding of how the technology, innovation, or experiment may change life on this planet, no matter who undertakes it.

Why do we need it?

By way of example, Guston cited some of Church’s work: the Harvard researcher recently unveiled a novel strain of E. coli bacteria that needs an artificial amino acid to survive, so the amino acid serves as an on/off switch. The paper that Church co-wrote with several other authors marks a foundational contribution to the future development of synthetic life forms. It shows one method by which humans can control the survival and reproduction of human-engineered organisms at the genetic level. Sounds like an obvious safety feature that should make its way into all future synthetic biology research, right? It is, until you consider how even benign innovations can change in the hands of resourceful adversaries. Such consideration doesn’t fall within the parameters of the normal research process, but it’s precisely what RTTA seeks to provide.

“Because the threat of the spread of such organisms is reduced in this way, it is possible that bad actors who want to create a dangerous novel bioweapon would be able to practice doing so without the risk that would normally accompany working with bacteria that could reproduce outside the lab and therefore be safer and more secure in developing that weapon,” Guston wrote. “The real trick is to ask these questions as the research is going on, and not once you have the product in hand.”

Guston and Sarewitz shy away from the word “prediction,” preferring “anticipation.” RTTA does look ahead, though it stops well short of forecasting specific outcomes. “RTTA explicitly distances itself from prediction; if reliable prediction were possible, RTTA wouldn’t be necessary. RTTA is a corrective, however, to the common notion that because scientific and technological futures are not knowable in detail, nothing can be done to anticipate or prepare for them,” said Sarewitz.

Guston and Sarewitz have received a fair amount of pushback from the mainstream scientific community for this idea.

“When Dan and I first generated these ideas in 2000-01, we submitted a proposal to the National Science Foundation that contained the rudiments of this work,” Guston said. “The reviewers were mostly quite hostile to the ideas we were espousing, indeed seeing them as hostile to the idea of free science in some instances. Over the years that we have presented this work, I have received numerous questions about whether this means that I'm hostile to basic, or to fundamental, or to curiosity-driven research, and the answer is no, that's beside the point. What we're talking about here is a coherent and self-conscious version of what goes on anyway, with a broader aspect of participation and understanding of what relevant expertise is.”

To Guston, RTTA is really just a modern version of common-sense safety precautions, like goggles, biosafety suits, or the institutional review board. “Creating risk for people outside of the laboratory is not part of the scientific ethos,” he said.

Gain-of-function research remains open to a very small number of labs, as does genetically engineering new forms of E. coli or researching the genetic roots of autobiographical memory, extreme height, and similar traits. But biological knowledge is just knowledge. Today’s Kickstarter project on engineering glowing plants (by inserting genes for the light-producing enzyme luciferase) could be tomorrow’s billion-dollar blockbuster drug or bioweapon.

It may be time to start talking about science differently, at least when it comes to national security. The good news is this: if the current predicament conclusively reveals anything about the future, it’s that science will survive even these attempts to better predict it.

CORRECTION: The original version of this article stated that in January 2012, a team of researchers from the Netherlands and the University of Wisconsin published a paper in the journal Science about dangerous forms of avian influenza. There were, in fact, two separate studies: one by a Dutch team published in May 2012 in the journal Science and one by a University of Wisconsin team published in June 2012 in Nature.

Additionally, the report inadvertently suggested that H5N1 had already become airborne in humans. The research showed how it could become airborne in humans.
